Memory Management

Introduction

i.) Memory access and memory management are a very important part of modern

computer operations. Every instruction has to be fetched from memory before it

can be executed, and most instructions involve Retrieving data from memory or

storage data in memory or both.

ii.) The advent of multi-tasking OS components, The complexity of memory

management because as the process are swapped in and out of the CPU, so much

their code and data be swapped in and out of memory or at high speeds and

without interfering with any other processes.

iii.) Shared memory, virtual memory, the classification of memory as read-only

vs read-and-write and concepts like copy-on-write forking all

further complicates the issue.

Basic hardware

i.) It should be noted that from the memory chip point of view, all memory

accesses are equivalent the memory hardware doesn't know what a particular

part of memory is being used for, nor does it case. This is almost true

of the OS as well although not entirely.

ii.) The CPU can only access its registers and main memory. It cannot

make direct access to the hard drive, so any data stored these must first be

transferred into the main memory chip before the CPU can work with it.

iii.) Memory accesses to registers are very fast and CPU may be able to

execute more than one machine instructions within the same clock rate.

iv.) Memory accesses to the main memory are comparatively very slow and

may take a no. of clock rates to complete. This would require intro able

waiting by the CPU. if were not for an intermediatory fast memory cache built

into most modern CPUs, This basic idea of the cache is to transfer data

from the main memory to the cache and then to access individual locations one

at a time from the cache.

v.) User processes must be restricted so that they only access memory

allocation that belongs to the a particular process. This is usually

implemented by using a base register and a limit register for each process.

Every memory access made by a user process is checked against these two

registers, and if a memory access is all emptied outside the valid

range, then the fault error is generated the OS has access to all memory

allocations as this is necessary to swap user's code and data in and out of

the memory. It has also access to change the contents of base and limit

registers which the help of OS kernel.

Address binding

User program typically refers to to memory accesses with symbolic names such

as "I", "COUNT", "AVG", "TMP", etc. Theses symbolic names are also it

must be mapped or bound to the physical memory addresses which typically

occurs in several stages :

i.) Compile time.

If it is known at compile time where a program will reside in physical memory,

Then absolute code can be generated by the compiler, containing actual

physical address. However, if the load address change at some later time, then

the program will have to be recompiled again.

ii.) Load time

If the location at which a load program will be loaded is not known at the

compile time, then the compiler must generate relocatable code,

which refers addresses relative to the start of the program. If that stating

address change then the program must be reloaded but not recompiled.

iii.) Execution time

If the program can be moved around in memory during the course of its

execution then bending must be delayed until execution time. This requires

special hardware and is the method implemented by most modern operating

systems.

Logical Vs physical memory spaces.

i.) The address generated by the CPU is a logical address, where as the

address actually seen by the memory hardware is a physical address.

ii.) Address bound at compile time or run time have identical logical and

physical addresses.

iii.) Address created at execution time however, have different physical and

logical addresses.

a.) In this case, the logical address is also known as virtual address.

b.) The set of all logical addresses used by a program composes the

logical address space and the set of all corresponding physical

addresses composes the physical address space.

iv.) The runtime mapping of logical to physical addresses is handled by the

memory management unit (MMU).

a.) The MMU can take on many forums. one of the simplest is a modification of

the base register scheme.

b.) The base register is now termed as relocation register, Whose value is

added to every memory request at the hardware level.

v.) Note that user program never sees physical address space program work

entirely in logical address space, and any memory references or manipulations

are done using purely logical addressed only when the address gets sent to

the physical memory chips is the physical memory address generated.

Dynamic loading

Rather than loading entire program into memory at once, dynamic loading loads

up each routine as it is called, the advantage is that unused routines need

never to be loaded reducing the total memory usage and generating faster

program start-up times. The downside is the added complexity and overhead of

checking to see if routine is loaded every time it is called and then it

loads up if it is not already loaded.

Dynamic linking and shared libraries

i.)With static linking library modules get fully include in

executable modules, waste both disk space and main memory uses because every

program included a certain routine from the library would have to their own

copy of that routine linked into there execution code.

ii.) With dynamic linking, However only the stubs linked into

the executable containing reference to the actual library modules linked in

runtime.

a.) This method saves disk space, because the library routine don't need to be

fully executed in the only the stuvus.

b.) The main memory can be saved by loading only one copy of dynamically

linked routine into the memory and sharing the code among all the process that

are consequently using it. The OS must manages share routine in memory.

c.) An added benefit (dlls) also known as shared libraries or shared object

inverse easy up gradation or updates.

Swapping

The process must be loaded in the memory in order to execute, if there is no

any memory available at same time then some process in memory at the

same time then some process who are not currently using the CPU may have their

memory swiped out to a fast local disk called the packing store. If compile

time

or load- time address binding. is used, then processes must be swapped back

into the same memory allocation from which they were swapped out.

If execution time binding is used then the processes can be

swapped into the memory allocation.

Swapping is a very slow process compare to other operation

fro e.g :- if a user processor occupies 10 MB & the transfer rate for

the backing store where 40 MB/s then it would take 1/4 second just to do

the transfer of data. Adding in the residency of 8 milli-second &

ignore at size time for the moment & further recognizing the data old

data out as well new data i the over all transfer-time require for this

swapping is 500 milli-second for over half a second. For efficient

processor scheduling the CPU time slice should be significantly should be

longer than this loser time as host how much it might use. Programmer can

help with this by freeing the dynamic memory that they one no longer

using.

It is important to swap process out of the memory only

where they are ideal OS, More to the point, only when their are no

pending I/O operations otherwise, the pending I/O operations could rise

into the long process memory space. The solution is to either swap only

totally ideal process or do I/O operation only into & out of OS

buffers, which are them transferred to from processes main memory as a

second step.

Most metal OS no longer usage swapping, because it is

for slow & their are faster alternatives are available i.e :-

pages. However, some unique system will still involve stepping if the

system gets extremely full, & then discotheque swapping when the load reduces again.

Use a modified version that was somewhat controlled by user.

The swapping process out if necessary & then only swapping then

back in, when the user focused on that particular windows.

Continuous memory allocation

One approach is to memory management is to load into a contiguous space

the operating system is allocated space usually at earlier lower or higher

memory allocation & the remaining a variable memory is allocated to

the process as needed. The OS is sincerely loaded low because i.e. This in fact vector are located but at

the system, The OS was to move more comes in low memory. low memory of 640

KB barrier.

i.) Memory protection

It is against the user program accessing the area that they should not

always program to be relocated to different memory starting address as need

& allow the memory space devoted to OS to grow or shrink dynamically as

need changes.

ii.) Memory allocation

One method of allocating contagious mapping is to divide all available memory

into equal size proportion & to assign each process to their own position.

This restrict both the no. of each simultaneous processes & the maximum

size of each process, This technique is no longer in use.

An alternative approach is to keep list of each process

& unused memory block & to find the block of a suitable size.

Whenever a process needs to be loaded in the memory. There are many

different strategy for finding the best allocation of memory to process

including the the pre-most commonly stated below.

a.) First fit

Search the list of block until one is found that is big enough to

satisfied the request and design a portion of that block to that process.

Whenever fraction of block not needed by requisite is left on the free list as

a smaller block subsequent request may start looking either from the beginning

of the list or from the point at which this search ended.

b.) Best fit

Allocate the smallest block that is big enough to satisfied to request this

save large block for other process request that may be need later. But the

resulted unused portion of the block may be two small to be of any use, and

will their for be wastage keeping the free list stored can speed up the

process of finding the right block.

c.) Worst fit

Allocate the largest blocks available there by increasing the likelihood that

the remaining portion will be usable for satisfying future request.

Simulation, shows that either first of best fit are better

then worst fit in term of both time and storage utilisation. First and best

fit are about equal in term of storage utilisation, but first fit is

faster.

iii.) Fragmentations

a.) All the memory allocation strategies suffers from external

fragmentation, through first and best fit experience the problems for more so

than the worst fit. External fragmentations means that the available memory is

broken up into a lot of little pieces, non of which is big enough to

satisfy the next memory requirement although the sum total could.

b.) The amount of memory lost to fragmentation may very with algorithm,

uses patterns and some decision such as which end to save on the free list.

c.) Statistical analysis of first fit, for example - show that for 'n'

blocks of allocated memory, 0.5n will be lost to fragmentation.

d.) Internal fragmentation also occurs, with all memory allocation

strategies. This is caused by the fact that memory is allocated in blocks of a

fixed size where as the actual memory needed will rarely be that exact size

for a modern distribution of memory requests, on the average 1/2 block will be

only half-full.

A.) Note that the same effect happens with hard-drives and that Modern

hardware gives us increasily large hard-drives and memory at the expense

of every large block size, which translates to more memory lost to internal

fragmentation.

B.) In some systems use variable sizes block to minimize losses due to

internal fragmentation.

e.) If the programs in memory are relocatable (using execution time

address binding), Then the external fragmentation problem can be reduced via

computation that is moving all the processes down to one end of physical

memory. This only involves updating, the reallocation registers for each

process, as all internal work is done using logical addressing.

f.) Another solution is to allow processes to use non-continuous block

of physical memory, with the separate reallocation of register for each block.

iv.) Segmentation

Basic Methods

a.) Most uses/programmers do not think of their programs as existing in

on continuous linear address space.

b.) Rather they tend to think of their memory in multiple segments is

dedicated to a particular use, such as code, data, the stack, the heap, etc.

c.) Memory segments supports this view by providing addresses with a

segment numbers (mapped to a segment based address) and an offset from the

beginning of that segment.

d.) For example, a complier may generate five segments for the user

code, library code global (static), variables, the stacks and the heap.

v.) Segmentation hardware

A segmentable maps segment offset addresses to physical addresses and

simultaneously checks for invalid addresses, using a system similar to the

page tables and reallocation based registers. Note that each segment is kept

in contiguous memory and may be of different size, but that segment can also

be combined with paging technique of memory management.

vi.) Paging

a.) Paging is a memory management scheme that allows processer physical memory

to be discontinuation, and which eliminates problems with fragmentation by

allocating memory in equal sizes blocked know as page.

b.) Paging eliminates most of the problems of the other method discussed

previously, and is the pre-dominant memory management techniques used

nowadays.

c.) Basic Methods.

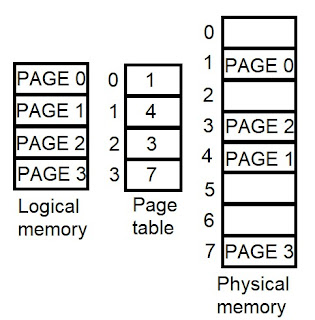

A.)The basic idea behind paging is to divide physical memory into a number of

equal size blocks called frames, and to divide programs logical memory space

into blocks of same sized called pages.

B.) The page (from and process) can be placed into any available page.

C.) The page table is used to look up what frame a particular page is stored

in at the mount.

D.) A logical address consists of two parts a page number in which the address

resides, and an offset from the beginning of that page. (The number of bits in

the page number limits how many pages a single process can address. The number

of bits in the offset determines the maximum sized of each page, and should

correspond to the system frame size.)

E.) The page table maps the page number to a frame number, to yield a

physical address which also has two parts- The frame number and the offset

with in that frame. The number bits in the frame number determines how many

frames the system can address and the number of bits in the offset determines

the size of each frame.

fig. :- Paging modal of logical and physical memory.

F.) Page numbers frame numbers and frames sizes are determined by the

architecture but are typically power of "2s" allowing addresses to be

split at a certain number of bits . For example, if the logical addresses size

is "2m" and "m-n" bits of logical address doesn't need the page number and the

remaining bits represents the offsets.

G.) Also note that the number of bits in the page number and the number of

bits in the frame number do not have to be identical. The former determines

the address rang of the logical address space and the later relates to the

physical address space.

H.) DOS used to use an addressing scheme with 16 bit frame numbers and 16 bit

offsets, on hardware that only supports24bits hardware addresses. The result

was a resolution of staring frame addresses finer then the size of a single

frame, and multiple efforts combination that mapped to the same physical

hardware.

Consider the following micro ex in which a process has 16

bits of logical memory, mapped consuming the remaining 16 bits of physical

memory.

I.) Note that paging is li8ke having a table of relocatable registers, one for

each page of the logical memory.

J.) There is no external fragmentation with paging. All blocks of physical

memory are used and there are no gaps in between and no problem with finding

the right sized blocks, for a particular chunk of the memory.

K.) There is however, internal fragmentation memory allocated in chunk of size

of a page and on the average the last page will only be half-full, wasting of

a average half a page of memory per processes are keeping their code and

data in separate pages.

L.) Large page sizes waste more memory but are more efficient in terms of

overhead. Modern trends have been to increase page sizes and some systems even

have multiple page sizes to try and make the best of both words.

M.) The page table increases (from numbers) are typically 32 bits numbers,

allowing access to 232 physical memory 32+12=44 bits of physical address

space.

N.) When a process request memory (when its code is loaded in from disk), free frames are allocated from a free frames list, and instead into that process page table.

O.) Processes are blocked from accessing anyone else memory because all of their memory requests are mapped through their page table. There is no way for them to generate an address that maps into any other process memory space.

P.) The OS must keep of each individual process page table, updating it whenever the process page get moved in and out of memory and applying the correct page table when processing system calls for a particular process. This all increases the overhead involves when swapping processor in and out of the CPU.

Hardware Support

i.) Page lookups must be done for every memory reference and whenever a process gets swapped in or out of the CPU, its paging table must be swapped in and out too along with instruction registers etc. It is therefore appreciated to provide hardware support for this operation, in order to make it as fast as possible. And to make process- switching also as fast as possible.

ii.) One option is used a set of register for page table.

iii.) An alternate option is used to store the page table in main memory and to use a single register called page table base register (PTBR), to record where in memory the page table is located.

a.) Process-switching is fast, because only a single register needs to be changed.

b.) However, memory access just got half as fast because every memory access now requires two memory accesses - one to fetch the frame number from memory and then another one to access the desired memory location.

c.) The solution to this problem is to use a very special high-speed memory device called translation took- aside buffer (TLB).

1.) the benefit of the TLB is that it can search an entire table for a key value in parallel and if a key value in parallel and if is found anywhere in the table then the corresponding look-up value is returned.

2.) The TLB is very expensive, however and therefore very small (not large enough to hold the entire page table) It is therefore used as a cache.

3.) Address are first checked against the TLB and if the information is not there then the frame is looked up from the main memory and the TLB is Updated.

4.) If the TLB is full, then replace strategy from least recent used (LRU) to random.

5.) some TLBs allow some entire to be wired down. Which means that they can't be removed from the TLB. Typically these would be kernel frames.

6.) Some TLBs store address space, identifies (ASITs) to keep track of which process owns a particular entry in the TLB. This allows entries from multiple processes to be stored simultaneously in the TLB without granting one process address to some other process memory location without this feature, The TLB has to flues in with every process switch.

Protection

i.) The page table can also help to prefect processes from accessing memory that they shouldn't or their own memory in ways that they shouldn't.

ii.) A bit or bits can be added to the page table to classify the page as read-write, read-only, read-write-execute or some combination of these short of things.

iii.) Valid/invalid bits can be added to mask off entries in the page table that are not in use by the current process.

iv.) Note that the valid/invalid bits describe cannot block all illegal memory accesses due to internal fragmentation. Areas of memory in the last page that are not entirely filled by the process and may contain data left by the previous process used that last frame.

v.) Many processes do not use all of the page table available to them particularly in modern systems with very large potential page tables. Rather than waste memory by chatting a full-size page table for every process, some system use a page table length register (PTLR) to specify the length of the page table.

Shared Pages

i.) paging system can make it very easy to share blokes of memory by simply duplicating the page numbers into multiple frames. This may be done with either code or data.

ii.) If code is reentered, That means it doesn't write to change that code in any way and it is therefore safe to re-enter it. More importantly, it means the code can be shared by multiple processes, as long as each have their own copy of the data and registers, including the instruction register.

iii.) Some systems also implement shared memory in a fashion.

Virtual Memory

i.) As we know how to avoid memory fragmentation by breaking process memory's requirements down into smaller bits (pages ), and storing the pages no-continuously in memory. However, The entire process still had to be stored in memory somewhere.

ii.) In practice, most real processes don't need all their pages, or at lest not all at the once, for several reasons:

a.) Error-heading code is not needed unless that specified error occurs, some of which are quite rare.

b.) Arrays are often over-sized for worst case scenarios and only a small fraction of array are actually used in practice.

c.) Certain features of certain programs are rarely used, such as the routine to balance the frugal budget.

iii.) The ability to load only the portion of processes that were actually needed has several benefits :

a.) Program could be written for a much larger address space (virtual memory space) than physical exits on a compute.

b.) Because each process is only using a fraction of their total address space, There is more memory for other programs, including CPU utilization and system through-puts.

c.) Less I/O is needed for swapping processes in and out of RAM, speeding things up.

iv.) Virtual address space which is the program logical view of the process memory storage is done on the secondary storage device. The actual physical layout is controlled by process page table.

v.) Note that the address space on the hard disks drive (HDD) secondary storage as a great page in the middle of the address space which is never used unless the stock or the heap grows to fill the pages.

vi.) Virtual memory also allows the sharing of files and memory by multiple processes with several benefits.

a.) System libraries can be shared by mapping them into the virtual addition space of more then one process.

b.) Processes can also share virtual memory by mapping the same block of memory to more than one process.

c.) Process page can be shared during a fork system call, eliminating the need to copy all the pages of the original (parent) process.

Demand Paging

The basic idea behind demand paging is that when a process is swapped in its pages are not swapped in at all once. Rather they are swapped in only when they process needs them (on demand). This is termed as lazy swapper, although Prager is a more accurate term.

Basic Concepts

i.) Basic idea behind paging is that when a process is swapped in, The pager only loads in memory these pages that it expect the process to need.

ii.) Page that are not loaded into memory are marked as invalid in the page table, using the invalid in the page of the table, using the invalid bits (The rest of the page table entry may either be blank or contain information about where to find the swapped-out page on the hard drive).

iii.) If the process only every accesses pages that are loaded in memory (memory resident pages), then the pages were loaded into memory.

iv.) On the other hand, if a page needed that was not originally loaded up, Then the page fault trap is generated which must be handled in a series of following steps:

a.) The memory address requested is first checked to make suru that it was a valid memory request.

b.) If the reference was invalid, the process is terminated. Otherwise, the page must be paged in.

c.) A free frame is located, possibly from a free frame list.

d.) A disc operation is scheduled to bring in the necessary page from disc (this will usually block the process on an I/O Walt, allowing some other processes to use the CPU in the meantime).

e.) When the I/O operation is complete the process page table is updated with the new frame number, and the invalid bit is changed to indicate that this is not a valid page reference.

f.) The instruction that paused the page fault must not to be restored from the begging (as soon as this process gets another term on the CPU).

v.) In an extreme case no pages are swapped in for a process until they are requested by page faults. This is know as pure demand paging.

vi.) In theory, each instruction could generate multiple page faults. In practice, their is very rare, due to local reference of memory.

vi.) The hardware necessary to support virtual memory is the same as for paging and swapping.

vii.) The crucial part of the process is that the instruction must be restarted from scratch once the desired page has been made available in memory. For most simple instructions, this not a major difficulty. However, there are same architectures that allow a single instruction to modify a fairly large block of data, which may spam a page boundary and if some of these data gets modified before the page fault occurs this could cause problems one solution is to access both ends of the block before executing the instruction, guaranteeing that the necessary pages in before the introduction begins.

Performance of demand paging

i.) There is some slowdown in performance its, whenever a page fault occurs and the system has to go get it from memory, to understand this how big the problem is, refer point (ii).

ii.) There are many steps that occur when serving a page fault and some of the steps are optional or variable. Swapped that a normal memory access required 200 nanoseconds, and that serving a page fault takes a millisecond (80,00,000 nanosecond or 40.000 times) with a page fault error of P that affects the access time is now,

(P-1) (200) + P*80,00,000

= 200 + 79,99,800P

Which clearly depends heavenly on P even if only one access in 1000 causes a page fault, the effective access time drops from 200 nanoseconds to 8.2 microseconds a slowdown of a factor of 40 times. In order to page fault rate must be less than 10% the page fault rate must be less than 0.0000025 or 1 in 3,99,990 accesses.

iii.) where as, swap space faster to access than a regular file system, because it doesn't have to go through the whole directory structure for this reason. some systems will transfer an entire process from the file system to swap space before starting up the process so that future paging all occurs from the fastest swap space.

iv.) Some systems use demand paging directly from the file system for binary codes (which Neve changes and does not have to stored on a page operation) and to reserve the swap space for the data segments must be stored. This approach is used by both Solaris and BSD unites.

Copy-on-write technique

i.) The idea behind copy-on-write is that the pages for a parent process do not have to be actually copied until one or the other of the processes changes the pages. They can be simply shared between the two processes in the meantime, with a bit set that the page needs to be copied if it ever gets written to this a reasonable approach since the child process usually issued on Excel. system call immediately offer the fork.

ii.) Obviously, one pages that can be modified even need to be labelled as copy-on-write old segment can simply be shared.

iii.) Pages used to satisfy copy-on-write duplication are typically allocated using the zero-fill-on-demand meaning that previous contents are zero before the copy proceeds.

iv.) Some systems provide an alternative to the fork (system call called virtual memory fork or fork (j). In this case, the parent is suspended and the child uses the parent's memory pages this is very fault for process creation but require that the child not modify any of the shared memory pages before performing the Excel) system call.

Page replacement

i.) In order to make the most use of virtual memory reload several processes into memory at the same time since we only load the pages that are actually needed by each process at any given time, there we had to load in the entire process.

ii.) However, memory is also needed for other purposes such as I/O buffering and what happens if some process suddenly decides it needs more pages and there are not any free frames available. The several possibilities for such cases are :-

a.) Adjust the memory used by I/O buffering etc. to free up some from for user processes. The decision of how to allocate memory for I/O vs user processes is a complex task, yielding different policies on different systems some systems allocate fixed amount of I/O where as others let the I/O system contained for memory along with everything else.

b.) Put the process request more page into a wait queue until some free frames become available.

c.) swap some processes out of memory completely freeing up its page frame.

d.) Find some page in memory that is not begging used right now, and swap that page only out to disc, freeing up a frame that can be allocated to the process requesting it. This is known as page replacement and is the most common solution. There are many different algorithm for page replacement which is subject of the remainder options.

Basic page replacement

i.) The page fault processing assumed that there would be free frame list now the page fault must be modified to free up the frames if necessary as follows:

a.) Find the location of the desired page on the disc, either in swap space or in the file system.

b.) Find the free frame, if there is a free frame their use it otherwise use the the page replacement algorithm to select the select the existing frame to be replaced which is known as victim frame, then write the victim frame to disc and change all the related page table to indicate that this page is no longer in memory.

c.) Read in the desired page and store it in the frame with adjustment to all related pages and frame tables to indicate the change.

d.) Restart the process that is waiting for this page.

ii.) Note that in step (b) adds an extra disk write to the page fault handing effectively doubling the time required to process a page fault, this can be some what by assigning a modify bit or ditty bit to each page indicating whether or not it has been set, then the page is unchanged and does not need to be written out to disk otherwise the page write is required. It should come as no surprise that many page replacement strategies specifically look for pages that don't have their dirty bit set and select clean page as victim page, It should also be obvious that modifiable code pages never get their dirty bit set.

iii.) There are two major requirements to implement a successful demand paging system we must develop a frame allocation algorithm the former centers around how many frames are needs, and the latter deals with how to select a page for replacement when there are no free frames available.

iv.) The overall goal in selecting and queuing these algorithm is to generate a few no. of improvements for avoiding page faults. Because the disc access is too slow as compared to memory access and even slights improvements to these algorithms can gives us overall system performance.

Allocation of frames

1. Minimum number of frames

i.) The absolute minimum number of frames that a process must be allocated is dependent on system architecture, and corresponding to the worst-case scenario of the number of pages that could be touched by a single (machine) instruction.

ii.) If an instruction (and its operands) spans a page boundary, the multiple pages could be needed just for the instruction fetch.

iii.) Memory reference in an instruction touch more pages, and if those memory locations can span page boundaries, then multiple pages could be needed for operand access also.

iv.) The worst case involves indirect addressing are allowed. left unchecked , a pointer to a pointer to a pointer ta a ............ could theoretically touch every page in the virtual address space in a single machine instruction, requiring every virtual page be loaded into physical memory simultaneously. For this reason architectures place a limit (say 16) on the number of levels of indirection allowed in an instruction which is enforced with a counter initialize to the limit and decrement with every level of indirection in an instruction - if the counter reaches zero, then an "excessive indirection" trap occurs. That's why the minimum frame allocation of per process is required.

2. Allocation algorithm

i.) Equal allocation

if there are m frames available and n processes to share them, each process gets m/n frames, and the left overs are kept in a free-frame buffer pool.

ii.) Proportional allocation

Allocation the frames proportionally to the size of the process, relative to the total size of all process i is s_i, and s is the sum of all s_i, Then the allocation for process p_i is a_i = m*s_i/s.

iii.) Variation an proportional allocation could consider priority of process rathe than just their size.

iv.) All allocation fluctuate over time as the number of available free frames, m, fluctuates and all are also subject to the constraints of minimum allocation. if the minimum allocation cannot be met, then processes must either be swapped out or not allowed to start until more free frames become available.

No comments:

Post a Comment